Eye Movement Metrics Of Human Motion Perception And Search (Leland S. Stone)

Overview

Visual display systems provide critical information to pilots, astronauts, and air-traffic controllers. The goal of this research project was to develop precise and reliable quantitative metrics of human performance, based on non-intrusive eye-movement monitoring, that can be used in applied settings. The specific aims were 1) to refine the hardware, optics, and software of eye-trackers to allow the non-intrusive acquisition of eye-position data with high temporal and spatial precision, 2) to measure quantitatively the links between eye-movement data and perceptual-performance data during tracking and search tasks, and 3) to develop biologically based computational models of human perceptual and eye-movement performance. Validated quantitative models of human visual perception and eye-movement performance assisted in the design of computer and other display systems optimized for specific human tasks, the development of eye-movement-controlled machine interfaces, and the evolution of artificial vision systems.

In FY97, considerable progress was made in the technical effort to improve the spatial and temporal resolution of infra-red video-based systems. In collaboration with ISCAN Inc., a high-speed infra-red video-based prototype eye-tracker was benchmarked at a precision of 0.12° with a 240 Hz sampling rate, although over a limited range of ~±5°. In collaboration with Dr. Krauzlis at the National Eye Institute, benchmark data from the state-of-the-art invasive eye-tracker (an eye-coil system) were gathered for comparison.

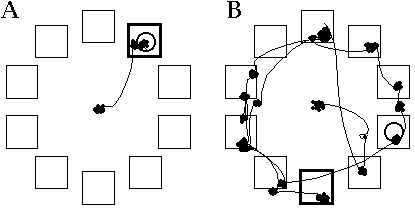

Simultaneous measurement of direction judgments and eye movements validated the use of signal-detection theory to predict errors in direction judgments from eye movements. Preliminary evidence suggested that the spatial integration rule used to drive tracking eye movements for moving black-and-white, luminance-defined targets is not simple vector averaging and appears to be similar to the rule used for perception. However, perception and eye movements may not share the same motion processing for color- and contrast-defined targets. In collaboration with Dr. Eckstein of the Cedars-Sinai Medical Center, signal-detection theory was also applied to a search (target-location) task. The perceptual judgments and eye movements followed similar trends. Fig. 1 shows that in an easy condition both the first eye movement and the final perceptual decision were correct, whereas in the hard condition both were incorrect. A computational model based on signal-detection theory was used to quantify these trends and to compare the amount of information about target location available for controlling eye movements with that available for the final perceptual decision. The situations in which eye-movement data provide reliable information about the human observer's perceptual state are being identified by systematically measuring and comparing perceptual and eye-movement responses under a number of display and task conditions.

Figure 1. Search Task. The observer was given 4 seconds to find a disk target embedded in noise in one of 10 locations (squares). The bold square indicates the location of the target, the big open circle the final decision, and the small solid circles eye position during fixations. A. In this high signal-to-noise trial, both the first eye movement and the decision quickly indicated the correct location. B. In this low signal-to-noise trial, the eye examined many locations and the final decision was wrong.
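
As an illustration of how signal-detection theory can put eye-movement and perceptual accuracy in a search task on a common scale, the sketch below uses the standard textbook max-rule observer for an M-alternative localization task. It is not the project's actual model, which is not specified here. It assumes independent, unit-variance Gaussian responses at each location, with the target location's mean shifted by d'. The function names (`proportion_correct`, `d_prime_from_accuracy`) and the numerical settings are hypothetical choices made for this example.

```python
import math


def phi(x):
    """Standard normal probability density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def Phi(x):
    """Standard normal cumulative distribution."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def proportion_correct(d_prime, n_locations=10, steps=4000):
    """Probability that a max-rule observer picks the target location.

    Assumed model (not taken from the source): the observer chooses the
    location with the largest response. Integrates
    phi(x - d') * Phi(x)**(n - 1) over x with the trapezoid rule.
    """
    lo = -8.0 + min(0.0, d_prime)
    hi = 8.0 + max(0.0, d_prime)
    h = (hi - lo) / steps
    total = 0.0
    for i in range(steps + 1):
        x = lo + i * h
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * phi(x - d_prime) * Phi(x) ** (n_locations - 1)
    return total * h


def d_prime_from_accuracy(pc, n_locations=10, tol=1e-8):
    """Invert proportion_correct by bisection (valid for chance < pc < 1)."""
    lo, hi = 0.0, 10.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if proportion_correct(mid, n_locations) < pc:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

With 10 locations, `proportion_correct(0.0)` returns chance performance (1/10), and accuracy rises with d'. Under these assumptions, one could convert the accuracy of the first eye movement and the accuracy of the final decision into d' values with `d_prime_from_accuracy` and compare them directly.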

Contact

Leland S. Stone

(650) 604-3240

leland.l.stone@nasa.gov
