Dynamic Eye-Point Displays (Mary K. Kaiser & Walter W. Johnson)

For an increasing number of vehicles, out-the-window displays are being replaced or augmented by synthetic display systems. Usually, these systems display imagery from video cameras, imaging sensors, or computer databases to emulate the perspective views pilots are accustomed to using for vehicular control. Numerous efforts are focused on ensuring that the synthetic imagery has sufficient image quality (e.g., resolution, contrast range) and content (e.g., level of detail) to substitute for out-the-window displays.

However, there is another important difference between optical windows and panel displays which has critical implications for user interaction. Windows are apertures; movement of the pilot's eyes relative to the aperture changes the pilot's viewing volume (i.e., field-of-view, center of field) through the window. Panel displays, on the other hand, show the same image regardless of the pilot's vantage point. Thus, even if a display panel has the same field-of-view as a cockpit window when measured at a given design eye point, it will not provide the same field-of-regard; the window affords the pilot a much greater "look around" capability.

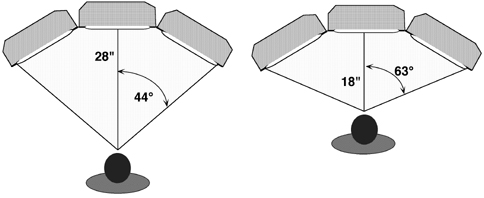

In our laboratory, we are developing a "virtual window" system suitable for flight deck environments. This system uses an unobtrusive sensor to monitor the pilot's eye position in three-dimensional space. The eye-point coordinates are passed to the computer image generator, which renders three perspective scenes appropriate for the three screens of the display. As shown in Figure 1, the field-of-view of the displays changes dynamically as a function of the viewing distance. Likewise, the center of projection will change appropriately if the viewer moves off the center axis. Such dynamic, real-time modification of the graphical eye point gives the rendered scene the same viewing geometry transformations that occur when looking through optical windows.
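The viewing geometry described above can be illustrated with a minimal sketch for a single flat screen. This is not the authors' implementation: the function name `screen_frustum`, the coordinate convention (eye position given relative to the screen center, with distance measured perpendicular to the screen), and the example dimensions are all assumptions made for illustration.

```python
import math


def screen_frustum(eye, width, height, near=0.1):
    """Off-axis viewing volume for one flat screen (illustrative sketch).

    eye    -- (x, y, d): eye position relative to the screen center, where
              d > 0 is the perpendicular distance to the screen plane.
    width, height -- screen size, in the same units as the eye position.
    near   -- distance of the near clipping plane from the eye.

    Returns ((left, right, bottom, top), hfov_degrees), where the four
    bounds are measured at the near plane and hfov_degrees is the
    horizontal angle the screen subtends at the eye.
    """
    x, y, d = eye
    if d <= 0:
        raise ValueError("eye must be in front of the screen (d > 0)")

    # Project the screen edges, as seen from the eye, onto the near plane.
    scale = near / d
    left = (-width / 2 - x) * scale
    right = (width / 2 - x) * scale
    bottom = (-height / 2 - y) * scale
    top = (height / 2 - y) * scale

    # Horizontal field of view: sum of the angles to the two side edges.
    hfov = math.degrees(
        math.atan2(width / 2 - x, d) + math.atan2(width / 2 + x, d)
    )
    return (left, right, bottom, top), hfov


if __name__ == "__main__":
    # Hypothetical 2-unit-wide screen viewed on-axis from two distances.
    for distance in (1.0, 0.5):
        _, fov = screen_frustum((0.0, 0.0, distance), width=2.0, height=1.0)
        print(f"distance {distance}: horizontal FOV {fov:.1f} degrees")
```

With these made-up dimensions, halving the viewing distance widens the horizontal field of view from 90 degrees to roughly 126.9 degrees, and moving the eye sideways makes the left and right bounds asymmetric, which shifts the center of projection. The four bounds have the form expected by an asymmetric perspective frustum such as OpenGL's `glFrustum`; a three-screen system would presumably compute one such volume per screen.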

The current system is configured for computer-generated imagery. In principle, the system can be adapted to video and sensor systems; in these cases, the head-position data would be used to drive the camera/sensor servo-mount positions and lens focal-length settings. Again, the coupling of camera/sensor position and eye point relative to the display panel would provide the synthetic display with the natural look-around capability of optical windows.
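As a rough illustration of how viewing distance might map to a lens setting, the sketch below equates the camera's horizontal field of view with the angle the display subtends at an on-axis eye. It assumes an ideal pinhole camera and a viewer on the display's center axis; the function name `matched_focal_length` and the example numbers are hypothetical, and off-axis viewing (which would also involve the servo-mount position) is not covered.

```python
def matched_focal_length(eye_distance, display_width, sensor_width):
    """Focal length that matches camera FOV to the display's subtended angle.

    Pinhole-camera assumption, on-axis viewer:
        display half-angle: tan(a) = (display_width / 2) / eye_distance
        camera half-angle:  tan(a) = (sensor_width / 2) / focal_length
    Equating the two gives
        focal_length = sensor_width * eye_distance / display_width.

    eye_distance and display_width share one unit; the result is in the
    unit of sensor_width.
    """
    if eye_distance <= 0 or display_width <= 0 or sensor_width <= 0:
        raise ValueError("all arguments must be positive")
    return sensor_width * eye_distance / display_width


if __name__ == "__main__":
    # Hypothetical 36 mm wide sensor and a 2-unit-wide display.
    for distance in (1.0, 0.5):
        f = matched_focal_length(distance, display_width=2.0, sensor_width=36.0)
        print(f"distance {distance}: focal length {f:.1f} mm")
```

Under these assumptions, a viewer who moves closer to the display calls for a shorter focal length, that is, a wider camera field of view, which mirrors the behavior of the rendered scene.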

In addition to increasing field-of-regard capabilities in synthetic vision systems, this technology can be used to enhance the fidelity of aircraft simulators by allowing simulated contact displays to behave more veridically.

Figure 1. Dynamic eye-point displays alter the graphical viewing volume (field-of-view, center of field) appropriate to the viewer's current eye position. Here, for example, the field-of-view increases from 88° to 126° as the viewer moves closer to the displays.
