
Human Motion Perception: Error Metrics and Neural Models (Leland S. Stone)


Overview

Pilots and astronauts need to make accurate judgments of their self-motion (or of the motion of the craft they are controlling) to navigate safely and effectively. In many critical aerospace tasks, such as flying a helicopter at low altitude under low-contrast conditions or landing the shuttle after several weeks of adaptation to microgravity, human self-motion estimation is pushed to its limits, yet any perceptual error could have disastrous consequences. The goal of this research project was to identify the visual conditions under which humans are likely to make errors in visual motion judgments and to understand at the neural level why these errors occur, as part of a strategy to develop methods of preventing or mitigating them. The specific aims were 1) to develop predictive, biologically based models of human performance in heading estimation and related motion perception tasks, and 2) to identify empirically the conditions that lead to human error, in order to test, refine, and validate some models while ruling out others. Validated quantitative models of human self-motion perception would aid in the design of training regimes for pilots, in the development of displays and automation systems that interact more effectively with pilots flying aircraft and/or spacecraft, and in the evolution of artificial vision systems based on the massively parallel architecture of the human brain.

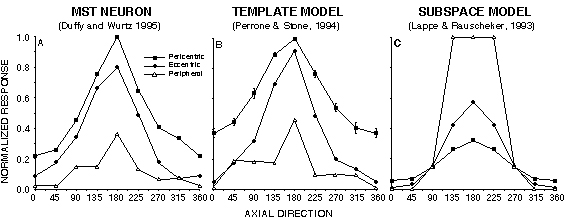

In collaboration with Dr. Thompson at the University of York in the United Kingdom, human errors associated with low-contrast motion stimuli (such as motion seen through fog) have been identified. In FY 97, the finding that flicker and speed perception errors have opposite contrast dependences almost entirely ruled out the class of models of human speed perception that rely on flicker to derive motion.

In collaboration with Dr. Perrone at the University of Waikato in New Zealand, a neural "template" model of human visual self-motion estimation was developed in 1994. In FY 97, it was demonstrated that the neural elements within this Ames-developed template model can quantitatively mimic the response properties of neurons in the medial superior temporal area (MST), a visual processing area within the primate brain thought to underlie self-motion perception, whereas the neural units of subspace models cannot (Fig. 1). The template model also correctly predicts that, during self-motion along a curved path, human perception will show a small bias in the direction of the turn, but will not show the errors associated with discontinuities in the environmental layout that decomposition models predict.

Figure 1. A. The responses of a real neuron in area MST as a function of heading direction along a series of axial directions at three eccentricities. B. The responses of a template model "neuron" to the same set of visual stimuli. C. The responses of a subspace model "neuron" to the same set of visual stimuli.
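The basic template idea can be illustrated with a deliberately simplified sketch. Assuming pure observer translation and a pinhole camera with unit focal length, the image motion (optic flow) radiates from a focus of expansion that marks the heading. Each candidate heading then defines a radial "template," and the estimated heading is the candidate whose template best matches the observed flow, loosely analogous to reading out the most active unit in a bank of MST-like detectors. The function names, the cosine-similarity matching rule, the random scene, and the candidate grid are all illustrative assumptions; this is not the Ames template model, which is considerably more elaborate, and because the sketch handles only straight-line translation it says nothing about the curved-path case described above.

```python
import math
import random


def translational_flow(points, heading, speed=1.0):
    """Optic flow for pure observer translation (pinhole camera, focal length 1).

    points:  list of (x, y, depth) image points with depth > 0.
    heading: (x0, y0), the focus of expansion (Tx/Tz, Ty/Tz) in image coordinates.
    speed:   forward translation speed Tz.
    Returns (u, v) flow vectors that radiate away from the heading point.
    """
    x0, y0 = heading
    flow = []
    for x, y, z in points:
        # With Tx = x0*Tz and Ty = y0*Tz: u = Tz*(x - x0)/Z, v = Tz*(y - y0)/Z.
        flow.append((speed * (x - x0) / z, speed * (y - y0) / z))
    return flow


def template_response(points, flow, candidate):
    """Hypothetical template unit: sum of cosine similarities between the
    observed flow and the radial pattern expected for `candidate` heading."""
    cx, cy = candidate
    total = 0.0
    for (x, y, _), (u, v) in zip(points, flow):
        ex, ey = x - cx, y - cy  # expected (radial) flow direction
        norm = math.hypot(ex, ey) * math.hypot(u, v)
        if norm > 0:  # skip a point lying exactly on the candidate heading
            total += (ex * u + ey * v) / norm
    return total


def estimate_heading(points, flow, candidates):
    """Return the candidate heading whose template responds most strongly."""
    return max(candidates, key=lambda c: template_response(points, flow, c))


random.seed(0)
# Hypothetical scene: 200 random points in a 2x2 image window at depths 2 to 10.
scene = [(random.uniform(-1, 1), random.uniform(-1, 1), random.uniform(2, 10))
         for _ in range(200)]
true_heading = (0.2, -0.1)
flow = translational_flow(scene, true_heading)
# Candidate headings on a 0.1-spaced grid from -0.5 to 0.5 in x and y.
grid = [(i / 10, j / 10) for i in range(-5, 6) for j in range(-5, 6)]
print(estimate_heading(scene, flow, grid))  # expected: (0.2, -0.1)
```

Holding one candidate fixed and varying the simulated heading instead yields a single template unit's heading tuning curve, similar in spirit to the model "neuron" responses plotted in Fig. 1B.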

Contact

Leland S. Stone

(650) 604-3240

leland.l.stone@nasa.gov
